What are the biggest threats associated with AI?

Advancing artificial intelligence holds a lot of promise, with the potential to help with everything from curing diseases to creating a better customer service experience.

Most of the talk tends to center on doomsday scenarios or a future where humans don't work anymore. While there's no doubt that automation will transform the modern workplace, it also stands to benefit society in many ways. Aside from our natural fear of change, what else is there to fear?

So what are the most significant realistic risks we may be battling in the future? Here's a look at a few things that humanity as a whole might need to worry about.


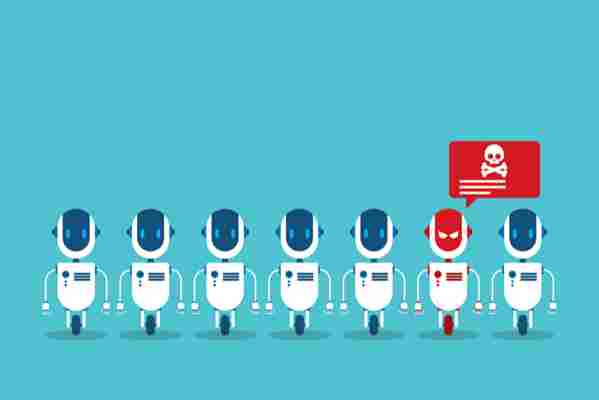

Increased cybersecurity woes

A key issue we face with advancing AI is the automation of vulnerability discovery. Humans will find it increasingly difficult to keep up with the bots, which may leave organizations that handle sensitive data exposed.

One of the biggest concerns about AI is what happens when the technology falls into the wrong hands.

Hackers are learning to perform something called smart phishing, which uses AI to analyze huge swaths of data to first identify potential victims and then craft believable messages that target those victims more effectively.

As it stands, about 88% of U.S. cybersecurity teams use AI as part of their strategy. While AI might not be reliable enough to serve as a standalone security solution, many professionals believe that it will become a key ingredient in fighting back against time-sensitive threats.

As more bad actors adopt automated hacking methods, the good guys will need to learn the language of cyberattacks: the ethical hacking of the future, if you will.


Fake news could get faker

Automation is threatening democracy. Or at least some researchers think so.

We're seeing early signs of the effects that AI can have on how we receive information. From Russian interference in the 2016 U.S. election to the spread of misinformation across social channels, the implications for the future of journalism are pretty scary.

And then there's the fact that almost anyone can create videos of events that never happened. Take deepfakes, in which someone's face is transferred onto another person's body. Unsurprisingly, the technique has been used to create custom porn by putting a celebrity's face on a porn star's body. Another example is the Obama video developed by researchers at the University of Washington.

What's more, automated fake news seems to be on the horizon. Sean Gourley, CEO of the data mining software company Primer, told MIT Technology Review that we might see fake news delivered on a personalized basis: reports tailored to a person's interests and, essentially, optimized for maximum impact.

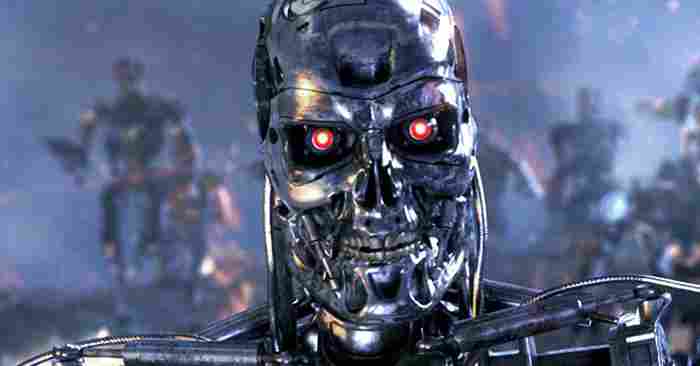

Autonomous weapons

Artificial intelligence would presumably take the dirty work out of carrying out a deadly attack. While this sounds like the stuff of a dystopian thriller, AI weaponry is moving from nightmare to reality.

As it stands, there are no weapons that can specifically target a person. Over the past several years, the UN has debated whether to ban lethal autonomous weapons systems, or LAWS, outright.

Last year, CEOs from 130 AI companies signed an open letter to the UN urging it to ban autonomous weapons before they become an unfortunate reality.

But given that war drones and weapons that can track incoming missiles already exist, we'll need to figure something out ASAP.

Our biases could spread

AI is known to pick up human biases, intentional or not. We've seen this play out in Microsoft's chatbot experiment (remember Tay?) and again in Amazon's recruiting AI test, where the algorithm, trained on years of resumes submitted mostly by men, learned to weed out job applicants who included the word "women's" in their resumes.

Unfortunately, one of the biggest risks of using AI is that it will reinforce stereotypes, many of which we weren't consciously carrying around. Take, for example, ProPublica's investigation into a commonly used software platform that predicts future criminals, which found that it was more likely to flag black defendants as being at higher risk of committing violent crimes.

The issue here is that we can (hypothetically) teach our AI to make the right choices, but it's hard to identify these biases until they surface.

Additionally, when machines must make their own decisions, they sometimes behave in ways that their programmers don't understand. That means when a bot makes a bad decision, it can be almost impossible to know how it got from point A to point B.

Wrapping Up

Considering the risks of AI means looking at a wide range of problems that could upend humanity as we know it.

From the spread of misinformation to the risk of devastating physical harm, not to mention the whole business about our future employment prospects, there will need to be a massive global effort to keep AI from falling into the wrong hands.

While the AI companies themselves seem to take social responsibility seriously, it's hard to predict how this will play out on the global and political level, especially when you consider that most people don't fully understand the technology.